This weekend we performed a quantitative analysis to evaluate the accuracy of drone-based photogrammetry results. For some time I’ve been very interested to see how well a photogrammetrically derived point cloud would identify the tops of trees or man-made objects, and how useful this technology may actually be for obstruction surveys. I want to strongly emphasize that this research project was not completed near an airport. Flying a UAV near an airfield is extremely dangerous. Our test site was carefully selected at a secluded location, miles from the nearest airfield.

We utilized a Geopro customized multi-rotor carrying a 12.4MP camera with a 20mm focal length, flying at 100 meters AGL. The 4000×3000 resolution photos resulted in a ground sample distance (GSD) of 4.3 cm (0.14 feet) per pixel. This height was selected primarily because of my comfort level; I wanted to stay below 400 feet, and a GSD under 5cm seemed like a good idea.
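For readers who want to check the GSD figure, here is a minimal sketch of the usual nadir-camera relationship (flying height times pixel pitch, divided by focal length). The sensor size is not stated above, so the code assumes the 20mm figure is a 35mm-equivalent focal length and that the 4:3 frame has the same diagonal as a 36×24mm frame. Both are assumptions for illustration, and the function and variable names are made up here.

```python
import math


def ground_sample_distance(altitude_m, focal_length_mm, sensor_width_mm, image_width_px):
    """Return the ground sample distance in meters per pixel for a nadir photo."""
    pixel_pitch_mm = sensor_width_mm / image_width_px
    return altitude_m * pixel_pitch_mm / focal_length_mm


# Assumption: a 4:3 frame with the same diagonal as a 36 x 24 mm frame.
# A 4:3 rectangle is a 3-4-5 triangle, so width = diagonal * 4 / 5.
full_frame_diagonal_mm = math.hypot(36.0, 24.0)
equivalent_width_mm = full_frame_diagonal_mm * 4.0 / 5.0

gsd_m = ground_sample_distance(
    altitude_m=100.0,
    focal_length_mm=20.0,
    sensor_width_mm=equivalent_width_mm,
    image_width_px=4000,
)
print(f"GSD: {gsd_m * 100:.1f} cm/pixel")
```

Under those assumptions the result lands close to the 4.3 cm quoted above. With the camera's real sensor width and focal length the number will shift slightly.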

We began by setting photogrammetric ground control around the site, consisting of eight points around the perimeter and center of the project. We collected the points using a Trimble R8 Model 3, receiving corrections from the Ohio VRS network. I assume the final positions are accurate within 2cm both horizontally and vertically, though we have not rigorously verified the positions. Work was completed in Ohio State Plane South using metric units. Geoid 12B was utilized to compute orthometric heights.

Next we established temporary survey points and utilized a recently calibrated 1” Trimble total station (5601) to measure the tops of objects using Direct-Reflect technology. In all we shot the tops of 36 trees, 6 posts, and 2 light poles. Some of the trees were in full leaf-on condition; others were dead or unfoliated.

After setting the points we launched our drone. The camera settings were applied manually:

- ISO 100 to get clean photos with minimal noise

- Aperture limit of f/2.8

- Shutter speed limit of 1/400 second

In total we collected 127 photos across the project area, flying two missions at 90 degrees to each other. The images are generally sharp with minimal motion blur.

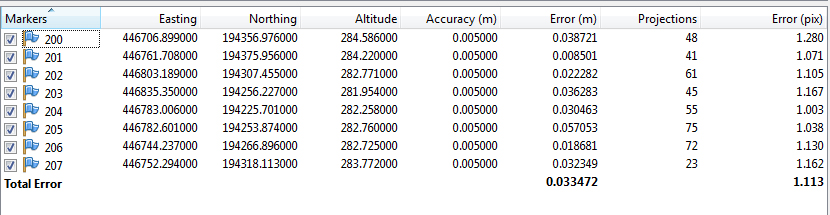

The photos were then loaded into Agisoft Photoscan and processed on ultra-high settings to avoid any image quality reduction. The GCPs and photo alignment resulted in an average reference point error of just over 3cm.

The final point cloud had just under 79 million points. These points were exported as a .LAS for analysis. A height-field model was then produced for visual inspection:

The resulting model looked impressive.


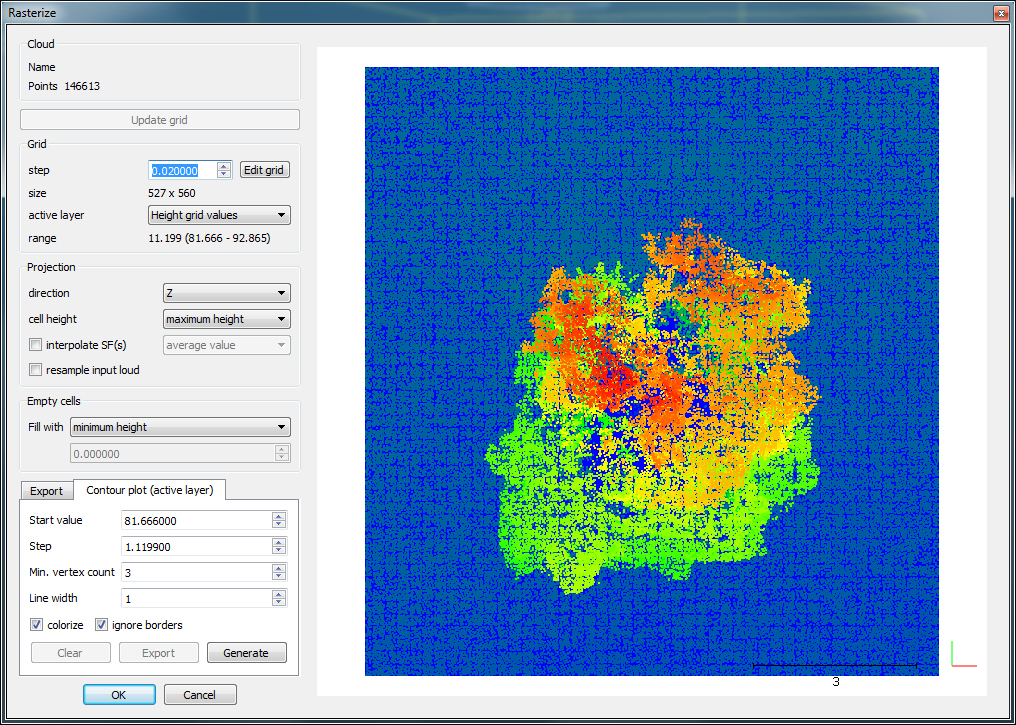

The exported .LAS file and terrestrially measured points were then loaded into Cloud Compare. The area around each point was segmented into its own cloud and analyzed with the height-field rasterization tool, using a 2cm grid and projecting the maximum height.
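To make the rasterization step concrete, here is a rough sketch of what a maximum-height projection onto a 2cm grid amounts to. This is not Cloud Compare's implementation, just the idea in plain Python. The function names and the sample points are hypothetical.

```python
import math


def max_height_grid(points, cell_size=0.02):
    """Map each (column, row) grid cell to the highest z value that falls in it."""
    grid = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        if cell not in grid or z > grid[cell]:
            grid[cell] = z
    return grid


def top_error(points, surveyed_top_z, cell_size=0.02):
    """Surveyed top elevation minus the highest rasterized elevation in the segment."""
    grid = max_height_grid(points, cell_size)
    return surveyed_top_z - max(grid.values())


# Hypothetical segmented cloud around one surveyed object (x, y, z in meters).
sample_cloud = [
    (0.005, 0.005, 10.0),
    (0.015, 0.010, 10.4),
    (0.030, 0.005, 11.2),
    (0.031, 0.006, 10.9),
]

grid = max_height_grid(sample_cloud)
error_m = top_error(sample_cloud, surveyed_top_z=12.0)
print(f"{len(grid)} cells, cloud falls short of the surveyed top by {error_m:.2f} m")
```

A positive error means the point cloud never reached the surveyed top, which is the failure mode of interest for obstruction work.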

Cloud Compare’s Height Field Tool


Note: Cloud Compare required a coordinate shift during import in order to maintain precision. A -200m offset was applied as a simple shift, so 292.865 becomes 92.865.
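The reason a shift helps can be sketched with a small experiment, assuming the coordinates end up stored in single precision (an assumption on my part about the import, not something verified here). The easting and shift values below are hypothetical, chosen only to resemble a large projected coordinate.

```python
import struct


def as_float32(value):
    """Round a Python float (double) to the nearest 32-bit float and back."""
    return struct.unpack("f", struct.pack("f", value))[0]


# Hypothetical projected easting in meters, and a shift that brings it near zero.
easting = 556123.457
shift = -556000.0

raw_error = abs(as_float32(easting) - easting)
shifted = easting + shift
shifted_error = abs(as_float32(shifted) - shifted)

print(f"error without shift: {raw_error * 1000:.1f} mm")
print(f"error with shift:    {shifted_error * 1000:.4f} mm")
```

With a value this large, single precision can only resolve steps of several centimeters, which is comparable to the GSD. After the shift the rounding error drops well below a millimeter.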

The results of the comparisons can be categorized by object type:

Man-made objects typically were not measured accurately unless they had a cross-sectional area substantially larger than the GSD. Not a single post (10cm diameter) was identified by the photogrammetric software, on average missing by 2.21 meters (7.25 feet), the height of the post. Street lights with their large (60cm) top housing were accurately measured within 0.25 meters (0.82 feet). It appears that for an object to be measured accurately, its cross-sectional diameter must be comfortably greater than 2 times the GSD: in this case the GSD was 4.27cm and a 10cm post, only slightly above that threshold, was not detected. I may do more testing to try to confirm or better approximate this requirement, but it seems the sampling theorem may generally still hold here.
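As a quick worked check of that rule of thumb, the sketch below expresses each object's diameter as a multiple of the GSD, using the diameters and GSD quoted above. The helper name is made up for illustration.

```python
def gsd_ratio(diameter_cm, gsd_cm):
    """Return how many ground pixels span the object's cross-section."""
    return diameter_cm / gsd_cm


GSD_CM = 4.27
object_diameters_cm = {"post": 10.0, "street light housing": 60.0}

ratios = {name: gsd_ratio(d, GSD_CM) for name, d in object_diameters_cm.items()}
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f} x GSD")
```

The post spans only a little over two pixels, while the light housing spans about fourteen, which is consistent with one being missed and the other being measured well.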

Trees with substantial and healthy canopy were measured reliably, on average within 0.45 meters (1.48 feet):

Trees with dead or no leaves were not measured accurately, on average missing by 2.72 meters (8.9 feet):

The above tree may look accurate, but Agisoft missed the top of the tree by 2.51m (8.2ft).

The following table compiles the results:

| Description | Count | Average error | Best | Worst |
| --- | --- | --- | --- | --- |
| Trees (healthy) | 30 | 0.43 m | 0.03 m | 1.56 m |
| Trees (no leaves) | 6 | 2.72 m | 2.30 m | 3.50 m |
| Posts | 6 | 2.21 m | 2.11 m | 2.31 m |
| Street Light | 2 | 0.27 m | 0.26 m | 0.28 m |

Looking at the results in their entirety, without differentiation between object types, it is worth noting the average vertical error was 1.08 meters (3.36 ft), just slightly more than the FAA 1A accuracy code.
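One way to combine the per-category rows into a single figure is a count-weighted mean, sketched below using only the counts and rounded averages from the table. Because it works from rounded category averages rather than the 44 individual residuals (which are not reproduced here), it should be read as an approximation and will not match the overall figure quoted above exactly.

```python
# (description, count, average error in meters), taken from the results table.
results = [
    ("Trees (healthy)", 30, 0.43),
    ("Trees (no leaves)", 6, 2.72),
    ("Posts", 6, 2.21),
    ("Street Light", 2, 0.27),
]

total_count = sum(count for _, count, _ in results)
weighted_mean_m = sum(count * avg for _, count, avg in results) / total_count

print(f"{total_count} objects, count-weighted mean error: {weighted_mean_m:.2f} m")
```

The healthy trees dominate the count, so they pull the combined figure down even though the posts and bare trees were missed by more than two meters each. That is a good reason to read the results by object type rather than as one average.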

Note: No evergreen trees were mapped as part of this effort, but based on their geometry, I would guess they tend to be similar to unfoliated tree results and would also be unsuitable for this approach.

My conclusion is that while drone technology offers a lot of promise, it cannot be exclusively relied upon, especially when man-made objects or slender natural features exist. A power pole without lighting or an aerial wire, for example, would go completely undetected using this drone technique. Those very dangerous objects are critical and must be accurately located during an obstruction survey, but a drone-only approach would likely miss them completely.

It is important that the drone imagery be further analyzed by trained photogrammetrists to identify and measure missing obstacles. Also critical are the quality control measures performed by ground surveyors, who measure obstacles using terrestrial techniques and ensure that no errors occurred during the automated processing of drone images. Relying on the software alone to compute obstructions could be a potentially disastrous risk, based on what we’ve seen here. With lives on the line it is essential that specially trained and skilled professionals perform the analysis and understand the limitations of the technology.

I am wondering if you have done any further testing.

I have to wonder if an oblique camera angle would improve the results, as I have seen objects like cell towers modeled accurately using this technique.

While a drone-only approach is almost never a real solution, even at the accuracy you were finding it seems like it would be a valuable tool for determining the potential extent of obstructions. As I am sure you have also found, when doing airport approach work we often identify obstructions (trees) using terrestrial methods, only to find that there are more “hiding” behind them that you cannot see until the initial trees are removed. It seems like this has real potential to let you “see” what is behind that front row.